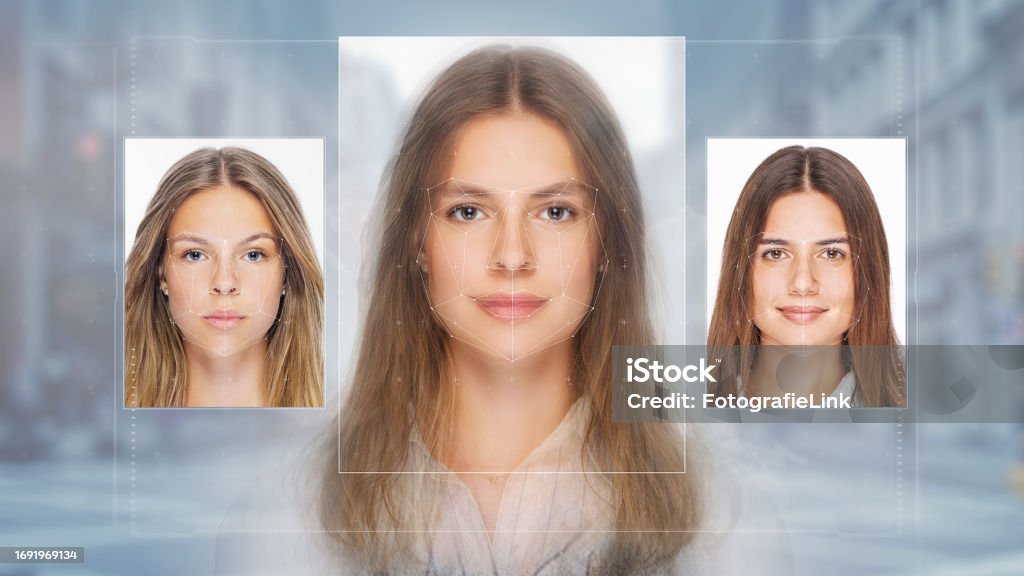

Deepfake technology refers to advanced artificial intelligence techniques for synthesizing highly realistic media. Deepfakes typically rely on deep learning models, such as autoencoders and generative adversarial networks (GANs), to swap people’s faces or voices, or even to generate entirely fabricated video and audio that looks and sounds authentic.
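As a rough illustration of the adversarial idea behind GANs, the textbook formulation pits a generator $G$, which maps random noise $z$ to synthetic samples, against a discriminator $D$, which estimates the probability that a sample is real. The discriminator tries to maximize the value $V$ by telling real data $x$ apart from fakes, while the generator tries to minimize it by producing fakes the discriminator accepts as real. Real-world deepfake tools vary in architecture, so treat this only as the standard objective, not a description of any particular app:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

Here $p_{\text{data}}$ is the distribution of real images or audio and $p_z$ is the noise distribution fed to the generator. As training progresses, the generator's output becomes harder to distinguish from real media, which is precisely what makes convincing fakes possible.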

While deepfakes have many positive applications, such as enhancing visual effects in films or allowing people with disabilities to better communicate, they also enable concerning forms of media manipulation with implications for privacy and consent. This article explores some of the key ways deepfakes challenge traditional notions of personal privacy and consent.

Enabling Nonconsensual Explicit Media

One of the most problematic applications of deepfake technology is the creation of nonconsensual, sexually explicit media. Apps have emerged that let anyone upload a photo of a person and generate a fake nude image of them. Similarly, pornographic videos featuring celebrities, politicians, and other public figures have proliferated without their consent.

The creation and distribution of this kind of content completely disregards consent and fundamentally violates personal privacy. Once a deepfake of this nature is released online, it can be almost impossible to contain or remove. Victims lose control over their public image, which can lead to further reputational, psychological, or even physical harm.

Policies around consent and permitted media usage must adapt to ban this practice and better protect people, especially women, who are disproportionately targeted in these attacks. Some jurisdictions are already defining explicit deepfakes as a form of sexual abuse or harassment. Technology companies also bear responsibility for detecting and removing such content where possible.

Impersonation and Fraud

In addition to nonconsensual intimate imagery, deepfakes may also enable impersonation and fraud. Manipulated audio or video could allow criminals to imitate someone’s voice or likeness to extract money or sensitive personal information from unsuspecting victims.

Imagine receiving an email from your manager requesting an urgent wire transfer, along with a video clip of them explaining the details. Or getting a call that appears to be from your bank, asking you to confirm your Social Security number. As deepfake technology advances, ordinary people will find it harder to reliably authenticate these kinds of requests.

Once again, policy plays an important role here in defining and outlawing unauthorized impersonation. People also need to know that deepfakes exist and to follow best practices, such as verifying questionable communications through a secondary channel before taking consequential action. Technical tools that automatically detect anomalous digital activity may also have a role to play.
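The secondary-channel advice above can be expressed as a simple decision rule. The sketch below is a minimal illustration under stated assumptions, not a real product or standard: `TRUSTED_DIRECTORY`, `Request`, `second_channel`, and `may_act` are hypothetical names. The key idea it encodes is that a consequential request is confirmed using contact details you already had on file, on a different channel than the one the request arrived on, so a convincing voice or video clip alone is never enough.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical directory of contact details recorded *before* any request
# arrived. Contact info embedded in a suspicious message is never trusted.
TRUSTED_DIRECTORY = {
    "alice.manager": {"phone": "+1-555-0100", "email": "alice@corp.example"},
}


@dataclass
class Request:
    claimed_sender: str   # who the message says it is from
    channel: str          # channel it arrived on: "email", "phone", ...
    consequential: bool   # e.g. a wire transfer or a request for an SSN


def second_channel(req: Request) -> Optional[Tuple[str, str]]:
    """Pick a trusted contact route on a different channel than the request used."""
    contacts = TRUSTED_DIRECTORY.get(req.claimed_sender, {})
    for channel, address in contacts.items():
        if channel != req.channel:
            return channel, address
    return None  # no independent route exists, so the request cannot be verified


def may_act(req: Request, confirmed_via_second_channel: bool) -> bool:
    """Act only if the request is low-stakes or was independently confirmed."""
    if not req.consequential:
        return True
    return second_channel(req) is not None and confirmed_via_second_channel
```

For example, an emailed wire-transfer request claiming to come from `alice.manager` would be confirmed by calling the phone number already on file, not a number supplied in the email; a request from an unknown sender has no independent route and is refused.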

Inappropriate Data Usage

Training deep learning models, the algorithms underlying deepfakes and other AI synthesis techniques, requires a great deal of data, much of it personal. This data is meant to be collected with individuals’ consent and used solely for model development. However, violations of data privacy can and do occur.

For example, a recent study found that 99% of face image datasets likely violate terms of use in how they collect, label, and manage people’s photos. Mishandling training data that includes personal information like faces or voices severely infringes on individual privacy while enabling downstream harmful applications.

Establishing clear data regulations, auditing procedures, and accountability around the use of private data for research and commercial purposes is critical. Comprehensive governance can enforce responsible data usage all along the deep learning supply chain, from academic data gathering to commercial model training and organizational deployment.

Surveillance and Tracking

Finally, advances in generative media raise concerns around surveillance and tracking. Deepfake technology is beginning to enable real-time mimicry of faces and voices. Combined with technologies like augmented reality glasses, this could allow covert recording or streaming without consent. Imagine going about your day while someone synthesizes your likeness saying or doing things without your knowledge.

Governments or private companies could also abuse these capabilities, synthesizing data to track citizens or quietly monitor spaces to a degree that far exceeds reasonable expectations of privacy. And while surveillance built on synthesized faces and voices may not yet be widespread, its emerging potential poses an alarming threat to civil liberties.

Once again, the onus is on legislators and regulators to restrict unacceptable applications of novel generative media and sensing capabilities that can otherwise silently erode personal freedoms. Citizens should also push back against technologies or policies that seem aimed at diminishing privacy under the guise of innovation or security.

The Role of Awareness and Education

As deepfake technology grows more powerful and accessible, people across all domains (policymakers, technologists, media organizations, and ordinary citizens) need a better understanding of responsible development and use, and specifically of how novel generative media and AI applications can undermine privacy and consent if left unchecked.

Some proactive initiatives already underway include:

- Media literacy programs that help people identify manipulated video and audio and recognize manipulation tactics

- Labeling policies for synthesized content across social media platforms and news publishers

- Academic ethics boards that monitor data usage and model development protocols

- Industry standards around permissible usage of generative AI capabilities

Comprehensive education and training will empower both creators and consumers of AI-synthesized media. This can foster a shared understanding of how to develop these applications responsibly, with due regard for their implications for personal consent, dignity, and privacy.

Ongoing dialogue and coordinated action are crucial as the pace of technological development continues to accelerate. Setting ethical norms and reasonable policies on consent and privacy in generative media now can help steer innovation toward the public interest rather than toward eroding personal agency through information asymmetries.

Fundamentally, deepfake technology provides increasingly believable and scalable methods for appropriating, imitating, and manipulating human images, voices, behaviors, and other personal information. Without careful constraints, threats such as consent violations, impersonation, fraud, and surveillance overreach quickly emerge.

However, thoughtful governance and public awareness offer promise for realizing the benefits of generative AI while upholding core civil liberties around consent, dignity, and personal freedom.